The machine that builds the machine

Rob Farrow, Head of Engineering at Profusion

I think we’ve all got it wrong. OK, bit of a bold statement. It’s not quite wrong, but I do think we could explain more clearly what it is we do in data and, more specifically, why you probably need it. A lot of the time we get bogged down in business speak and corporate jargon.

If you google the benefits of a datalake, you get the right answer, but it’s a subset of the real reason, the most powerful reason of all. Some articles or blogs allude to it, but it’s rare for it to be stated clearly.

The main benefit of a data platform is not just the insight itself, but the speed of getting it and the agility this gives your organisation.

That’s it.

The machine that builds the machine?

Elon Musk, for all of his sins, did get a few things right. One of these was how he scaled Tesla: by focusing not on the car but on the factory itself as the primary product, with the car as secondary.

(One day I will shut up about Tesla — today is not that day).

Tesla: Building the machine that builds the machine

This could be seen as an intensely subtle change in approach, and yet the impacts are profound. They took the automotive industry by surprise, and the rest of the industry is still catching up today.

$2.24 billion loss in 2017 to $12.6 billion profit in 2022.

That’s a pretty intense curve.

This is an abstraction on a huge scale. Abstractions are quite common in software, and more often than not we get them wrong; most technical debt is probably down to an incorrect abstraction one way or another. I often try to explain abstraction as a ladder, an understanding I gained from this article, which is beautifully illustrated here.

So what is a data platform?

A data platform is actually an abstraction, but an abstraction over your business insight. Instead of getting insights, you’re getting the ability to get insight faster. Your data platform is the machine that builds the machine.

Data has become increasingly fragmented over time. As businesses grow and occupy new niches, they tend to split their operations across multiple applications. What used to be a single customer management system is now two or even three, because one handles people in store, one handles people signing up online on the website, and so on. This has happened across the board.

So data platforms are a way to give a business a view on this stuff: pull it all into one place so you can actually calculate just how many customers you have. Sounds simple, right? Well, it’s usually not, because in reality these systems overlap. A customer in a store may well have signed up online too. How do you identify these overlaps and get a real number for how many customers you have?

Hence data platforms. These pull all your critical business data into one place and, importantly, represent your business rules in code.
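To make "business rules in code" a bit more concrete, here is a minimal sketch of the customer-counting problem. Everything in it is made up for illustration: the two record lists, the field names, and especially the rule that two records are the same customer if their emails match once tidied up. Real matching rules are usually messier than this.

```python
# Hypothetical records from two separate customer systems.
store_customers = [
    {"name": "Ada Lovelace", "email": "Ada@Example.com "},
    {"name": "Grace Hopper", "email": "grace@example.com"},
]
online_customers = [
    {"name": "A. Lovelace", "email": "ada@example.com"},
    {"name": "Alan Turing", "email": "alan@example.com"},
]


def customer_key(record):
    """Business rule (an assumption for this sketch): two records are the
    same customer if their emails match after trimming and lowercasing."""
    return record["email"].strip().lower()


def count_unique_customers(*systems):
    """Count distinct customers across any number of source systems."""
    keys = set()
    for system in systems:
        for record in system:
            keys.add(customer_key(record))
    return len(keys)


# Adding up the systems double counts anyone who appears in both.
naive_total = len(store_customers) + len(online_customers)
real_total = count_unique_customers(store_customers, online_customers)
print(naive_total, real_total)  # prints: 4 3
```

The point is less the matching logic and more that the rule lives in one named function. When the business changes its mind about what "the same customer" means, there is one place to change it.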

You often hear talk about datawarehouses, datalakes, datafabrics and datameshes (amongst others), but what actually are these? How does this relate to all the crap I wrote above? Do I even know what I’m talking about? The answer to all these questions is of course no.

Fail fast you must

These architectures matter because they are the machines that build your machine, and getting this right is key to enabling your business. These foundations are where you forge the decisions that steer your business.

Want a dashboard to show key areas of your business? It should be on your data platform.

Build some machine learning to automate some of your business decisions? Data platform.

Making decisions about your customers? Data platform.

Want to understand your business finances? Data platform.

Without these foundations you’re going nowhere fast. You can limp along for a bit without them, but this will soon spiral out of control if your business grows.

As time has marched on, data has become more complex and more fragmented, so the architectures of today prioritise speed. Datalakes are faster to build than warehouses, and faster to get to that MVP (minimum viable product).

Failing fast is absolutely critical. You will get it wrong the first time you do this, maybe even the first couple of times. This stuff is just too complicated and too deep to truly remove all ambiguities. We do what we can but, in truth, we know all along we’re doomed to fail (see my other blog on failure!).

So what can we do? We can fail fast.

We build in such a way that everything is decoupled, bits can be replaced, and every care is taken to ensure that software is interoperable as far as possible before the immutable parts are built.

This is a bit about architecture and design, but mostly it’s about investing in our data teams. So what about architecture?

Flavours of Platforms

These are just different ways of building these platforms, different architectures that all aim to ultimately do the same thing. They’re just different flavours of the same abstraction.

Datawarehouses

The first big one was datawarehousing, pioneered back in the 80s or so (before my time!). Businesses used to ride those babies for miles. Basically, the idea was to build a bigger database on top of all your other databases. Pull the data straight from your sources, change it to make it more user friendly on the way in, and then it’s all in one place. Super simple.

This would be like downloading a file and changing it as it arrives, without keeping the original. If you did your changes wrong, you’re downloading it again.

People found datawarehouses to be very slow to build, and quite ‘brittle’ and difficult to change, as every time you change anything at all, you’re pulling the data from your source system AGAIN.

Datalakes

Datalakes take a slightly different approach to fix this problem. The idea here is that you copy each of your sources into almost-replica datasets, and then change the data to be more user friendly after that. All this means in principle is that every time you need your data you’re pulling it from the ‘replica’ and not straight from the source, meaning that you have effectively ‘decoupled’ your ingestion (reading the data in) from your changes to the data.

Basically this would be the same as downloading your file, then making a copy and changing that copy. If your changes are wrong — you don’t have to download it again!
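Here is a rough sketch of that decoupling, under some loud assumptions: the "source system" is a made-up function, the "lake" is just a temporary folder with a JSON file in it, and the function names (`ingest`, `transform`, `read_source`) are invented for this example. It only shows the shape of the idea: ingest once, then change your mind about the transformation as often as you like without touching the source again.

```python
import json
import tempfile
from pathlib import Path

# Counts how often we hit the (hypothetical) source system.
source_reads = {"count": 0}


def read_source():
    """Stand-in for an expensive pull from a real source system."""
    source_reads["count"] += 1
    return [{"name": "ada lovelace"}, {"name": "grace hopper"}]


def ingest(source_rows, raw_path):
    """Ingestion: copy the source as-is into the 'replica'. No changes here."""
    raw_path.write_text(json.dumps(source_rows))


def transform(raw_path, rule):
    """Transformation: read from the replica only, never from the source."""
    rows = json.loads(raw_path.read_text())
    return [rule(row) for row in rows]


with tempfile.TemporaryDirectory() as lake:
    raw = Path(lake) / "customers_raw.json"
    ingest(read_source(), raw)

    # First attempt at the friendly version turns out to be wrong...
    first_try = transform(raw, lambda r: {"name": r["name"].upper()})
    # ...so we fix the rule and rerun it against the replica.
    second_try = transform(raw, lambda r: {"name": r["name"].title()})

print(source_reads["count"])  # prints: 1
print(second_try)
```

Two transformation attempts, one read from the source. In the warehouse-style approach described earlier, the second attempt would have meant going back to the source system.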

Datalakehouse

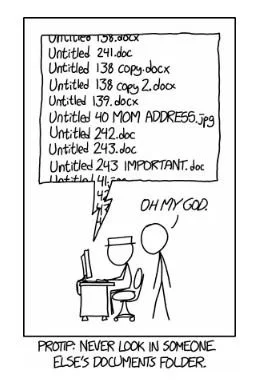

Datalakehouses were born out of the messes that were made by datalakes. Really a datalake is nothing more than a folder of allllll your companies data, and you don’t have to be a genius to work out how that’s going to go.

Pretty much this. Without structure and principles, this is how it goes. This in the data field is canonically known as the dataswamp, it essentially becomes an unmaintainable mess of copied data, with no real control, and then suddenly you have 15 versions of the same data which are all slightly different.

So a datalakehouse will try to fix some of this by adding some metadata management (data about your data, such as column types and the like), layers beyond just ‘land all your data in a folder’, and generally a bit of order to the chaos.
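As a small illustration of what "data about your data" can buy you, here is a sketch of checking rows against a declared set of column types before they are accepted. The schema, column names, and the `schema_errors` helper are all hypothetical; real lakehouse tooling does this far more thoroughly, but the principle is the same.

```python
# Hypothetical metadata for one dataset: column name -> expected type.
CUSTOMER_SCHEMA = {"customer_id": int, "email": str, "signed_up_in_store": bool}


def schema_errors(row, schema):
    """Return a list of problems with a row; an empty list means it conforms."""
    errors = []
    for column, expected_type in schema.items():
        if column not in row:
            errors.append(f"missing column: {column}")
        elif not isinstance(row[column], expected_type):
            errors.append(f"{column} should be {expected_type.__name__}")
    for column in row:
        if column not in schema:
            errors.append(f"unexpected column: {column}")
    return errors


good_row = {"customer_id": 1, "email": "ada@example.com", "signed_up_in_store": True}
bad_row = {"customer_id": "1", "email": "ada@example.com"}

print(schema_errors(good_row, CUSTOMER_SCHEMA))  # prints: []
print(schema_errors(bad_row, CUSTOMER_SCHEMA))
```

The bad row is flagged twice: its `customer_id` is a string rather than an integer, and `signed_up_in_store` is missing. Catching that at the door is a lot cheaper than discovering it in version 15 of a copied dataset.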

This is the most common pattern/flavour of data platform nowadays.

Datamesh

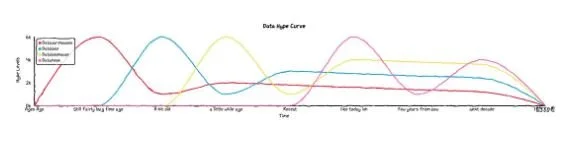

There is, however, a new design pattern on the scene. The datamesh is right at the top of its hypecurve now, and you’ll read all sorts of articles about the promises of the mesh (well, you may not if you don’t live in my world, but they definitely exist).

The datamesh has come about for one main reason: building a datalakehouse is hard, and it gets exponentially more difficult as your business accumulates more data. So maybe instead of building a single datalakehouse, you build a dozen smaller ones and then join them together!

In a few years, these will come out the other side of the curve and their true value will be found. At the moment, I think this is buzzword bingo.

The machine that builds the machine that builds the machine

Much to my annoyance, I’m not the first to come up with this concept. The machine that builds the machine that builds the machine. I assume Musk hasn’t seen this yet, otherwise he’d be building it as we speak.

So here is my proposal. When you think about the data that your company has, you will get the most value by focusing not on the machine (your business decisions), or the machine that builds that machine (your data platform), but on the machine that builds the machine that builds the machine (your data team).

Focus on empowering and upskilling your data team. Getting this stuff right is hard, but what we’re learning now is that a lot of these problems are not new. Software engineering has been doing this for a long time, and has learnt that the best way to tackle complex problems is with good engineering practices: code reviews, pull requests, decoupling, version control, unit tests and swathes more.
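To show what one of those practices looks like when applied to data work, here is a minimal sketch of a unit-tested business rule using Python’s built-in `unittest` module. The rule itself (a customer is "active" if they ordered within the last 90 days) and the function name are assumptions invented for this example, not anyone’s real definition.

```python
import unittest


def is_active_customer(days_since_last_order):
    """Business rule (assumed for illustration): a customer is active
    if their last order was between 0 and 90 days ago, inclusive."""
    return 0 <= days_since_last_order <= 90


class ActiveCustomerRuleTest(unittest.TestCase):
    def test_recent_order_is_active(self):
        self.assertTrue(is_active_customer(30))

    def test_boundary_is_active(self):
        self.assertTrue(is_active_customer(90))

    def test_old_order_is_not_active(self):
        self.assertFalse(is_active_customer(91))


# Run the tests programmatically so this works as a plain script.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ActiveCustomerRuleTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())
```

Once a rule like this sits in version control with tests around it, changing it goes through the same pull request and code review as any other software change.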

If your data engineers start to see themselves as really just specialised software engineers and recognise the value of this, and your business does too, then your machine that builds the machine that builds the machine is on the right track.

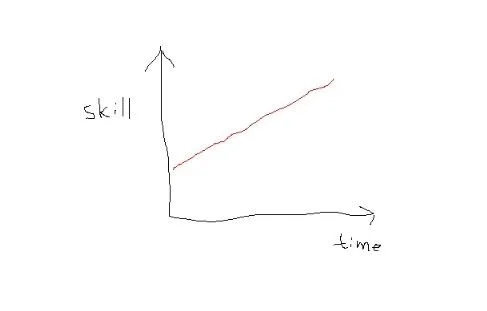

Or as one of my team has so artfully diagrammed.